self quantification?

body-tools-interface-social network

we have attempted to address a question of body quantification from two different starting critiques...

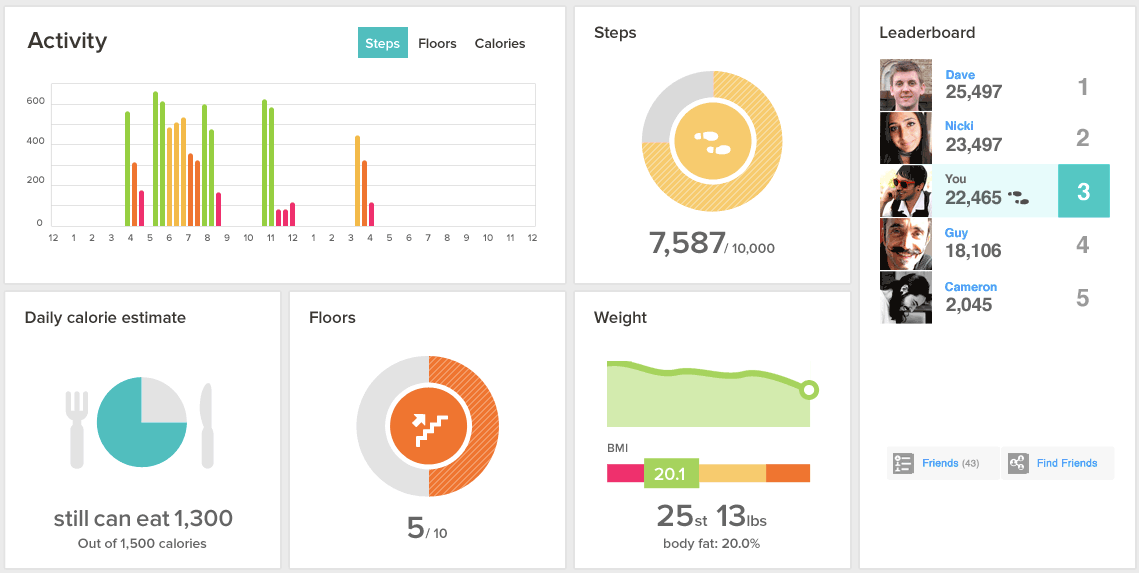

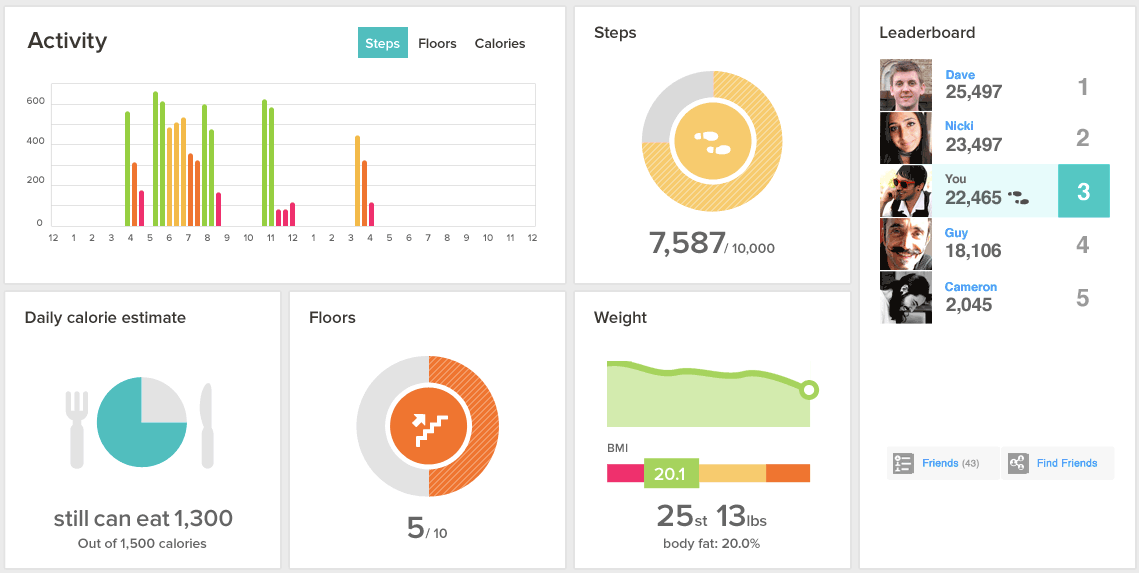

- the paradigm of 'fitness' apps and devices that track, record and interpret activity data to create a quantified body

https://www.fitbit.com/

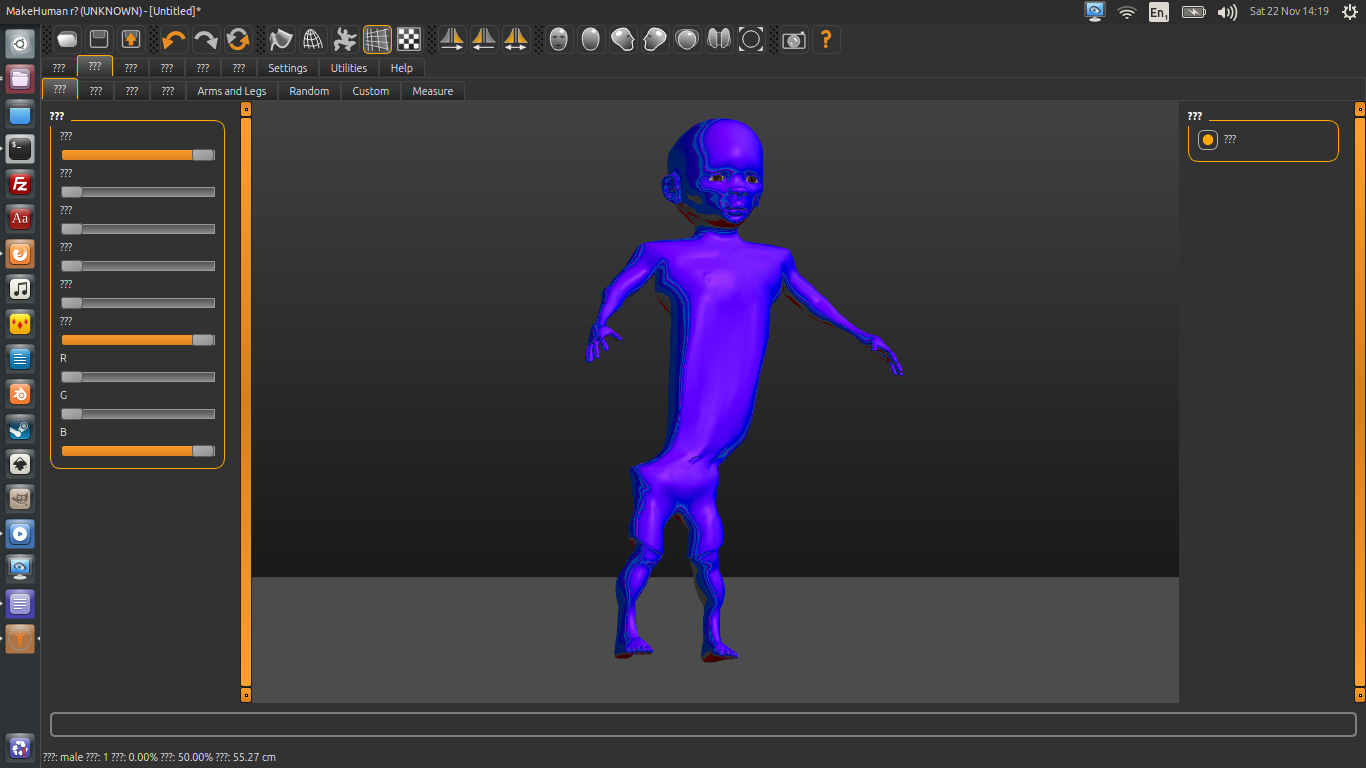

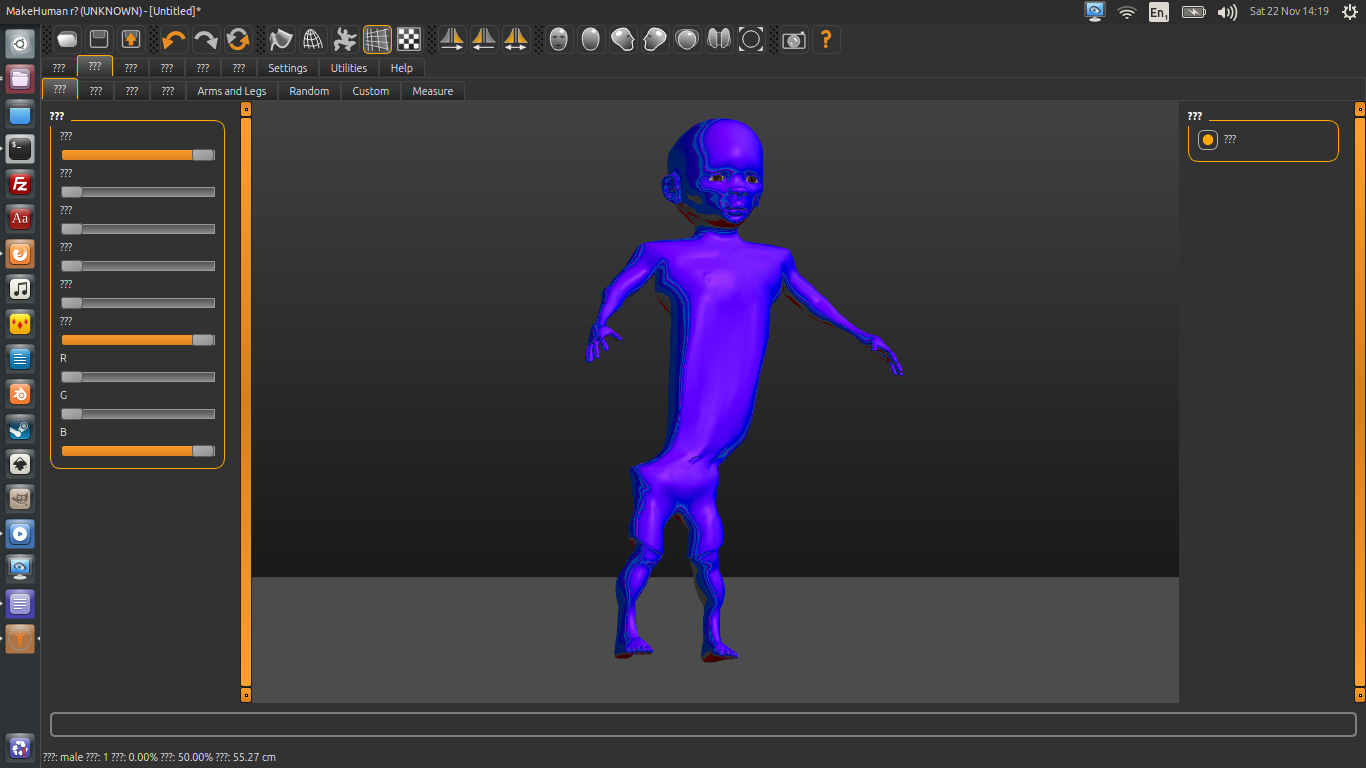

- 3D character modelling software that uses parametric techniques and complex mesh management to create 'humanoid' representations

http://www.makehuman.org/

both of these 'phenomena' are problematic in the sense that both systems create a mediated (digital) representation of the body. these representations are necessarily reductive and are based on a very limited range of obviously quantifiable information. In the case of fitbit, the software presents health issues as exclusively to do with 'physical' conditions (such as activity and calorie consumption). Makehuman codifies particular body measurements into parametric sliders to create an illusion of an infinite variety of form.

Both cases present a mode of quantification that is hugely difficult to accept: the means of quantification is fixed, static and 'global'. the user is not entitled to determine the type or extent of measurements that may be relevant and therefore has no agency in the process of quantification. this commonality is the starting point for the work: how can we explore the potential for 'self quantification'?

they also both lack something in terms of context - fitbit is a quantification without space and makehuman is a quantification without time

we have speculated on a kind of fitbit 'parody' that would, somehow, highlight the problems that we see in terms of data definition, collection and relation as well as interpretation and re-presentation through interfaces and devices.

*change the interface: e.g. parody of what data actually means (you are lazy, you are fat)

*deconstruct the rhetoric/ideology of the software/product: e.g. makehuman (below) -> look at and modify the software rather than the model

*get different data with the same kind of device: e.g. either experiences/states normally excluded, or apply it to something not human at all (how can we use fitbit for something else? tracking the shaking and moving around of keys in relearn/a device/a car)

* combining the data of many, rather than visualising the data of one---links to the hybrid city

*using data to create an ecosystem (collective tamagotchi)

* swapping data between participants: no longer a static record, but instead a 'challenge' to be undertaken, e.g. to drink the same amount as someone else, or to remain as 'sedentary'

*use the same tricks as they do, by partly measuring and quantifying and partly uploading personal information, but instead of filing the number of glasses of water you can introduce some context: history, a sense of place and time.

* narratives of the self to accompany the 'data' that is determined by the device/platform: super interesting, but how to represent this is very tricky

*invert the process: get measurements from the social network, on your own interface, and have to apply them to life (need to walk and drink as a random person/obsessive did today)

*use the pattern recognition (from the text gen group) on vector files used in interfaces, to map recurring visual elements: an attempt to map the discourse through its visual "rhetoric"

city track connection

*the data are used to run through cinematic images (a collection of walks, steps, sitting, and the social and political dimension? e.g. insurance, competition, support): cinema as interface. [[city]]

*the cinematic walk is quantified (enframed in the quantification paradigm), maybe by over-annotating in pandora -> abstraction (when walking for performance you almost erase the city-space, a blank paper to count steps on)

*the city track was thinking of creating a path through the city, incorporating different elements of their discussion on city/cinema (their discussion priorities/points = the parameters)

*the model in makehuman is without a life, trapped in no space, with no purpose --> cinema can be a way to implement and discuss the narrative element (and its lack)

* The Bride Stripped Bare by Her Bachelors, Even (La mariée mise à nu par ses célibataires, même): the bachelor machines as morbid, self-sufficient machines, developing a large

* The bachelor machine: the Bachelor Machines, defined by Deleuze and Guattari as "surfaces of recording, body without organs (...) the essential thing is the establishment of an enchanted surface of inscription or recording that attributes to itself all the productive forces and the organs of production, and that acts as a quasi-cause by communicating the apparent movement to them", are organised in multiple arborescences.

http://documents.irevues.inist.fr/bitstream/handle/2042/9142/ASTER_1989_9_43.pdf;jsessionid=A2B17BC1FCFEF3D97B141F5B49FB1596?sequence=1

http://christianhubert.com/writings/bachelor_machine.html

http://v2.nl/events/plateau-duchamp-and-the-machine-celibataire

https://conservationmachines.wordpress.com/2012/05/11/michel-carrouges-et-son-mythe-les-machines-celibataires/

*What is a sensor, what can it present, how can it be contextual, how can it be self/home-made, unique, different?

* Quality of the sound wave: it is both contextual and structured

linked work strands

- alternative data sets (personal, contextual, social, or other) that include other parameters. these can be automatically collected using a device (e.g. a smartphone) or manually entered (as is the case with fitbit)

- an alternative method of visualising the data: a different field other than the 'car dashboard' that is usually presented, using meshes from reMakehuman?

- use the device to generate 'false' readings using alternative gestures, movements, performances... --> e.g. the electronic monitoring ankle bracelet hack, the mechanical fitbit hack (put it on your dog, on a rotating drill): how to simulate the data that they want. an artist collective proposes diverse tricks and hacks: http://www.unfitbits.com/

- critique of the perfect human: The Perfect Human by Jørgen Leth https://en.wikipedia.org/wiki/The_Perfect_Human, remade under Lars von Trier's constraints in The Five Obstructions

*decide inputs/ data sets

*design interface

*perform/collect the data sets

one more pad:

[[quantification public]]

the current fitbit interface

../images/dashboard.png

some user comments on makehuman:

https://forums.unrealengine.com/showthread.php?23972-MakeHuman-Antima-try-1

Possible outcomes:

tutorial videos

alternative quantified self device as a prototype or as a documentation video

live-fed characters

versioned characters, e.g. with a sense of evolution

-----------------------------------------------------------------------------------------------------------------------------------------------------------------

DECISION

-----------------------------------------------------------------------------------------------------------------------------------------------------------------

Make structured datasets that correspond to a specific time period; they could be about anything, but we need to decide

Feed the datasets to the shaking makehuman processing script

Set some social parameters: twitter?

Make a population of human characters.

Make a clear discourse, or should it be self-evident?

It's an absolutely serious proposition for a fitness tracker

reason: people are not satisfied with the current interface. "I'm not a car, I prefer to be a balloon."

CATEGORIES OF THE DATASET

number of steps over time

contextual info: comments/images/

http://archive.ics.uci.edu/ml/datasets

µµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµ

Chance, luck:

Considering that producing experiences, however modest, is a rupture with representation and aesthetic simulations of reality, this project intends to constitute different drafts that function according to different time-based activations, each of them a moment of exploration triggered by chance or luck.

µµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµµmµµµµµµµµµµµ

...

coming from the experience of the samedies (cyber)feminist software meetings: learning python ourselves

...

how many glasses you drink, how many steps you take..

the role of commercial devices in it: fitbit.

the entry of a discrete measuring probe into a process that was intimate.

clash

a start-up getting big on the stock market

many insurance companies give a fitbit to their clients, collect the data and give back incentives

---

instead, a global, holistic point of view.

the idea of self-awareness, in its thousand-year tradition.

a contrast with the western current self-quantification

A long history of relating to walking as a nurturing process, a thinking tool: from aristotle ("walking is for the sake of bowel movements") to walking principles, or see henry david thoreau, Walking

Hearing a heartbeat becomes an obsession; biological sounds are really intrusive, they create a cocoon

Measuring a walk: you need to reach the threshold at any price.

infinite cauliflower

(solution to world hunger!)( need to re-write the minestrone script)

Mandelbrot!

fractal is some type of quantification.. what is the nature of this quantification?

live feedback with information...

problems:

* how to get the fitbit data live

*it is completely encrypted in its communications: the data gets uploaded to a server and then comes back as 'fitbit' data, with the visualization.

*The data is categorized in the way demanded by the fitbit social network

*pedometer (step counter): measures periods of rest

*http://www.walker.domainepublic.net/ a previous project that features a pedometer; we can use it to get denser data.

*Fitbit categories are:

*Body

*Date, BMI, Fat

*Food

*Weight, Calories

*Activities

*Date, Calories burned, Steps, Distance, Floors, Minutes sedentary, Minutes lightly active, Minutes fairly active, Minutes very active, Calorie expenditure

*Sleep

*Date, Minutes asleep, Minutes awake, Number of awakenings, Time in bed

*Ben Allard has hacked the fitbit and other devices (jawbone); ref. the galileo project on bitbucket

*https://bitbucket.org/benallard/galileo

Anita tells the story of a previous job where she was doing post delivery and was given GPS trackers; she modified the sheets tracking her path before giving them to her boss

Idea of making a new smart watch that would collect other things: the joy of your walk, the discoveries, the encounters.

In the spirit of previous videos make a tutorial of what could be an alternative quantified self device.

a provisional idea: fitbit like a tamagotchi...but hey, it exists already! http://www.engadget.com/2014/04/30/leapfrog-leapband/

Question: can fitbit see if you collide with another human being?

If we were to make a new interface that would be more context-based, could we use the same tricks as they do, by partly measuring and quantifying and partly uploading personal information, but instead of filing the number of glasses of water, introduce some context: history, a sense of place and time?

A: feeling good= being unaware of one's body

like with infrastructure, tools etc., i become aware of my body when it breaks, when it misbehaves

* How fitbit works is a way to think about the economic structure built into it (how encryption protects its economy)

* Once you have several thousand collections of data, the data changes its value

* The fact that data is stored remotely "mediated self-awareness"

* Questions of how the human is visualized

the visualization question

makehuman is a free piece of "parametric" software for making 3D models of people for games

mmmm sliders

...far off the ethnicity scale

gender, age, muscle, weight, height, proportions, african ?!, asian, caucasian

changes to the source code changed the narrative in the software, e.g. the ethnicity slider --> RGB sliders

file links viz showing how the python code is interlinked (over 200 python files)
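One way to get the data behind a file-links visualisation like this is to parse each file's import statements. A minimal sketch with Python's standard `ast` module, assuming the sources have already been read into a dict keyed by module name; `import_graph` and `to_dot` are hypothetical helpers, not part of makehuman. Files written for Python 2 may not parse under Python 3, so the sketch records them with no links instead of failing.

```python
import ast


def import_graph(sources):
    """Map each module name to a sorted list of the other project modules it imports.

    sources: {module_name: python_source_text}, e.g. filled by reading
    every .py file of the source tree (hypothetical input format).
    """
    known = set(sources)
    graph = {}
    for name, text in sources.items():
        try:
            tree = ast.parse(text)
        except SyntaxError:
            graph[name] = []  # e.g. Python 2 syntax: skipped, no links recorded
            continue
        targets = set()
        for node in ast.walk(tree):
            if isinstance(node, ast.Import):
                targets.update(alias.name.split(".")[0] for alias in node.names)
            elif isinstance(node, ast.ImportFrom) and node.module:
                targets.add(node.module.split(".")[0])
        # keep only links between files of the project itself
        graph[name] = sorted((targets & known) - {name})
    return graph


def to_dot(graph):
    """Write the graph in Graphviz DOT syntax, one edge per import link."""
    lines = ["digraph imports {"]
    for src, dsts in sorted(graph.items()):
        for dst in dsts:
            lines.append(f'  "{src}" -> "{dst}";')
    lines.append("}")
    return "\n".join(lines)
```

The resulting edge list could be drawn with Graphviz or loaded into a Processing sketch; with 200+ files, only project-internal links are kept so that standard library imports do not swamp the picture.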

using processing to make visualisations

*/ an inventory of experiences

*/ an inventory of absences

*/ a collective glossary

*/ an inventory of questions

*/ an inventory of tensions


reMakeHuman - hacking makeHuman

https://github.com/phiLangley/reMakehuman

reMakeHuman is a hack/ destabilisation fork of the 3D character modelling software 'makehuman'

http://www.makehuman.org/

the original software is a tool for early-stage modelling of digital characters for games/ animation/ movies. the software presents itself as a parametric modelling system, in which the user can modify variables to create a range of outcomes. however, the parameters that are used to define the 'human' are, at best, questionable....

- gender

- age

- height

- weight

- muscle

- african

- asian

- caucasian

this limited set of 'high level' parameters is accessed by the user through a series of 'sliders', a typical GUI feature. however, when we look closer at the source code, the story is a little different.....
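Under the sliders, parametric character tools of this kind commonly work with morph targets: stored per-vertex offsets that each slider scales and adds onto a base mesh. A minimal sketch of that general technique, assuming sparse targets stored as `{vertex_index: (dx, dy, dz)}`; the names `apply_targets`, `base`, `targets` and `weights` are hypothetical and not taken from makehuman's code.

```python
def apply_targets(base, targets, weights):
    """Blend sparse morph targets onto a base mesh (general technique, hypothetical names).

    base:    list of (x, y, z) vertex positions
    targets: {target_name: {vertex_index: (dx, dy, dz)}}
    weights: {target_name: slider value, e.g. 0.0 .. 1.0}
    Returns a new vertex list; `base` itself is never modified.
    """
    out = [list(v) for v in base]
    for name, w in weights.items():
        for i, (dx, dy, dz) in targets.get(name, {}).items():
            # each slider simply scales its stored offsets
            out[i][0] += w * dx
            out[i][1] += w * dy
            out[i][2] += w * dz
    return [tuple(v) for v in out]
```

In this sketch a 'gender', 'weight' or 'african' slider is the same thing: one more weight on a table of vertex offsets.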

the reMakeHuman project is an attempt to create a viable alternative character modelling platform, capable of creating other 'humans' by questioning

- the underlying parametric relationality

- other types of variable that could be used to create a more 'personalised', rather than generalised, piece of software

- the nature of a user interface that 'just works' versus something that is more unstable and unpredictable

there are many ways to edit the makehuman software... the source code contains image files, 3D model files and text files as well as python code. modifying each of these source files changes the software in some way

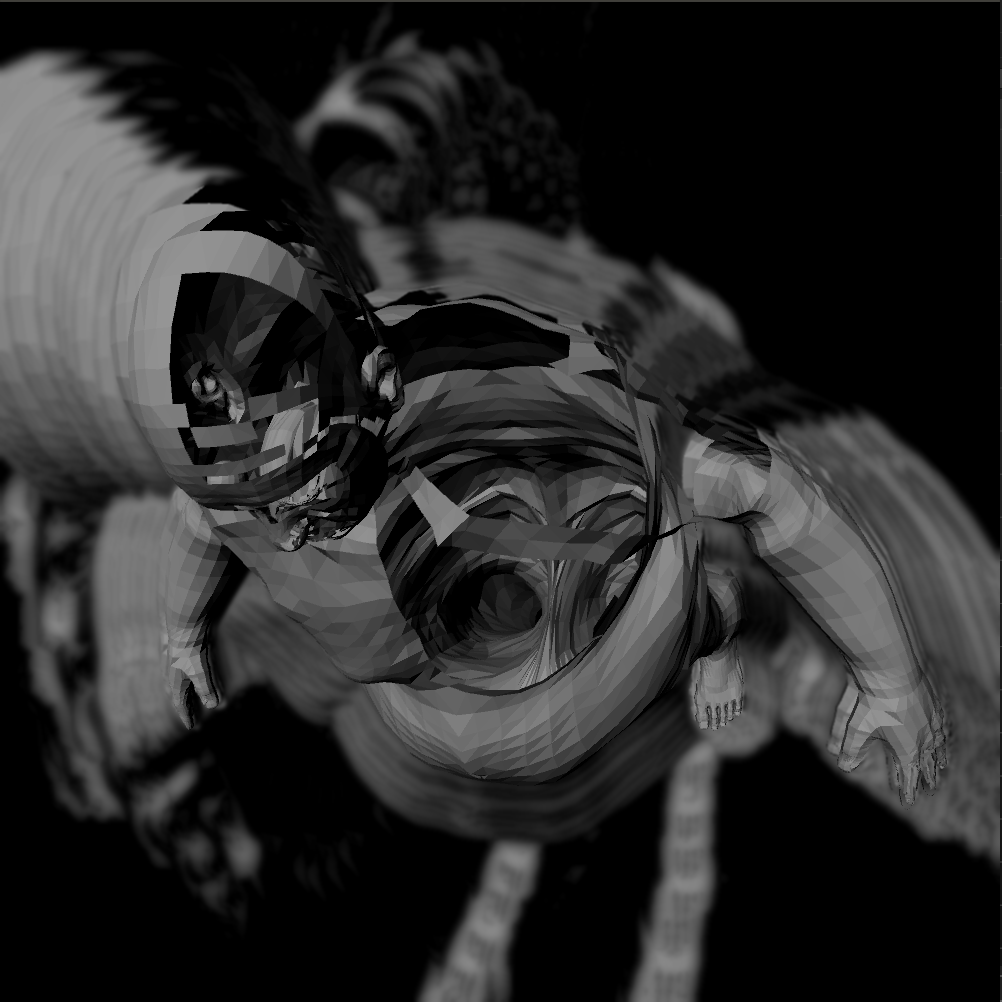

changing the software, rather than changing the model...

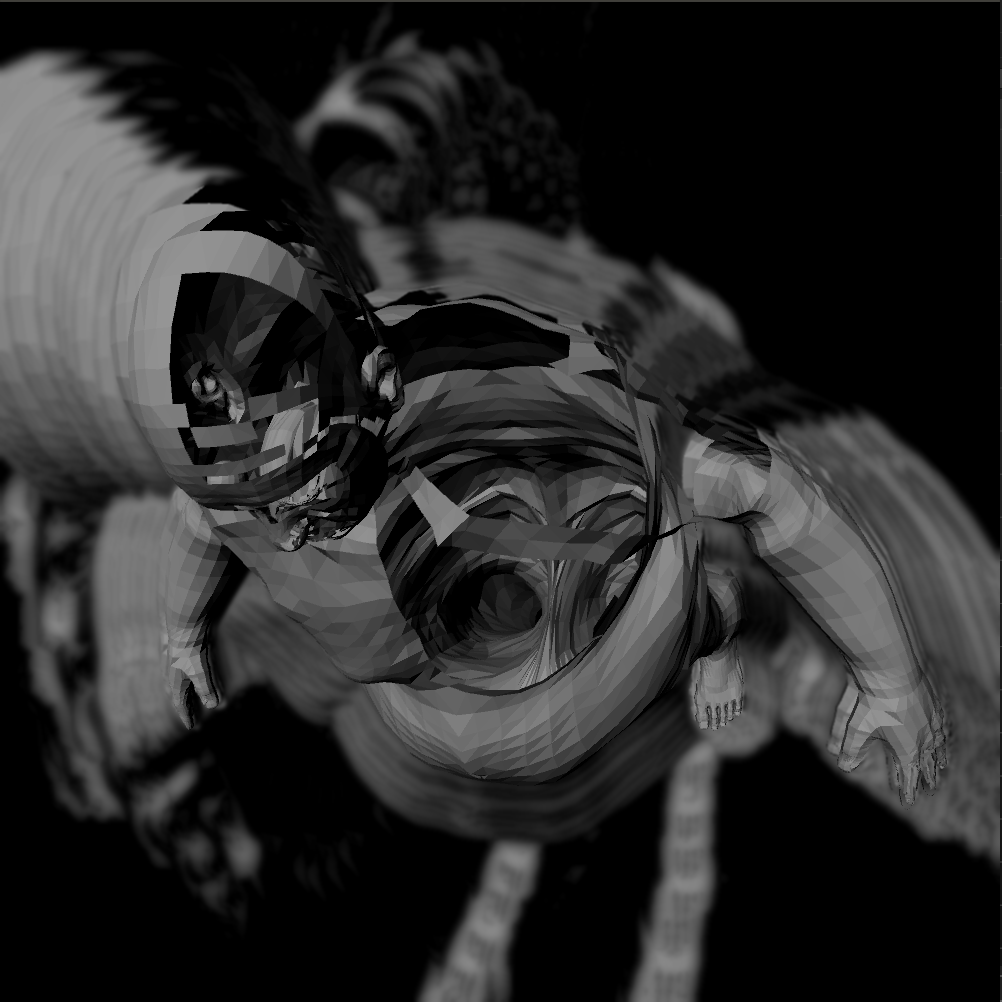

../images/distortions_5.png

translations...

../images/image0.png

distortions...

../quantified_data/meshMonitor.png

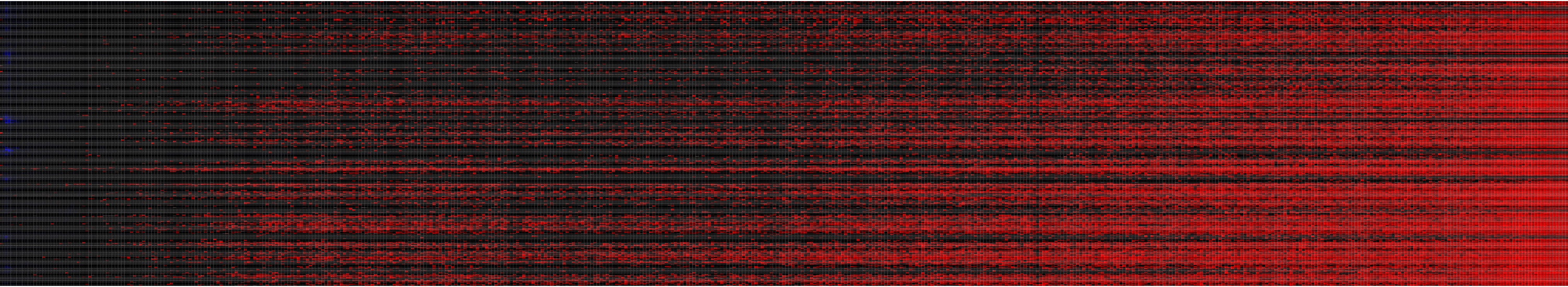

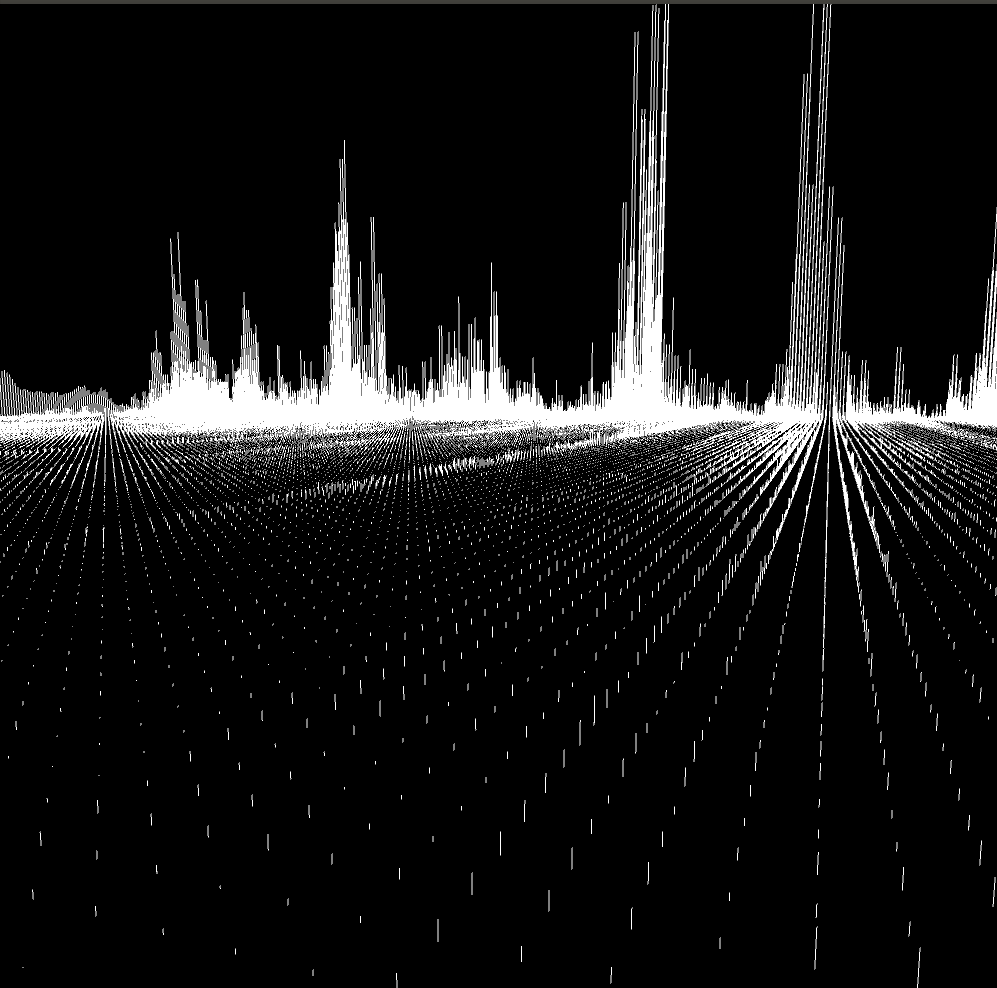

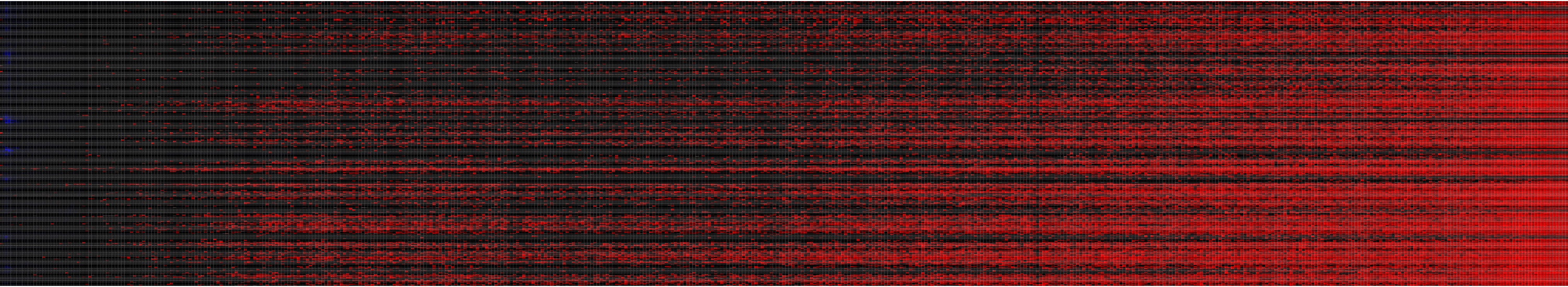

Audio beat detection

**********************

...some experiments, using Processing

we have been playing with audio data - in collection and analysis - as a way of challenging the de-contextualised data collection processes involved in fitness tracking apps and devices.

below is a simple data visualisation of the complete audio data of a 12 min walk. From left to right is the audio spectrum, similar to what you would see on a hi-fi, and from top to bottom is time, with each row representing a time sample. the colors are defined relatively by the numerical value at each frequency (blue is high, red is low).

we used processing and the 'minim' sound library to generate a csv file, which is then quickly filtered in libre office using conditional formatting to generate the image.
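The same pipeline (one spectrum row per time sample, saved as csv, coloured relative to the value range) can be sketched in plain Python. This is a stand-in, not minim's API: a naive discrete Fourier transform replaces minim's FFT (slow, but fine for illustration), and `spectrum_rows`, `to_csv` and `relative_colour` are hypothetical names. The red-to-blue ramp mimics the conditional formatting described above.

```python
import cmath
import csv
import io
import math


def spectrum_rows(samples, frame_size):
    """Cut mono samples into consecutive frames and return one row of
    DFT magnitudes per frame (bins 0 .. frame_size // 2).
    Naive O(n^2) DFT, standing in for minim's FFT."""
    rows = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        row = []
        for k in range(frame_size // 2 + 1):
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n, x in enumerate(frame))
            row.append(abs(acc))
        rows.append(row)
    return rows


def to_csv(rows):
    """One csv line per time sample, one column per frequency bin."""
    buf = io.StringIO()
    csv.writer(buf).writerows(rows)
    return buf.getvalue()


def relative_colour(value, lo, hi):
    """Linear ramp from red (lowest value) to blue (highest value),
    relative to the range [lo, hi] chosen by the caller; returns (r, g, b)."""
    t = 0.0 if hi == lo else (value - lo) / (hi - lo)
    return (round(255 * (1 - t)), 0, round(255 * t))
```

Usage: pass the whole table's minimum and maximum as `lo` and `hi` to colour each cell relative to the full walk, or a row's own range to colour each time sample on its own.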

../quantified_data/soundData/

../quantified_data/audioData.png

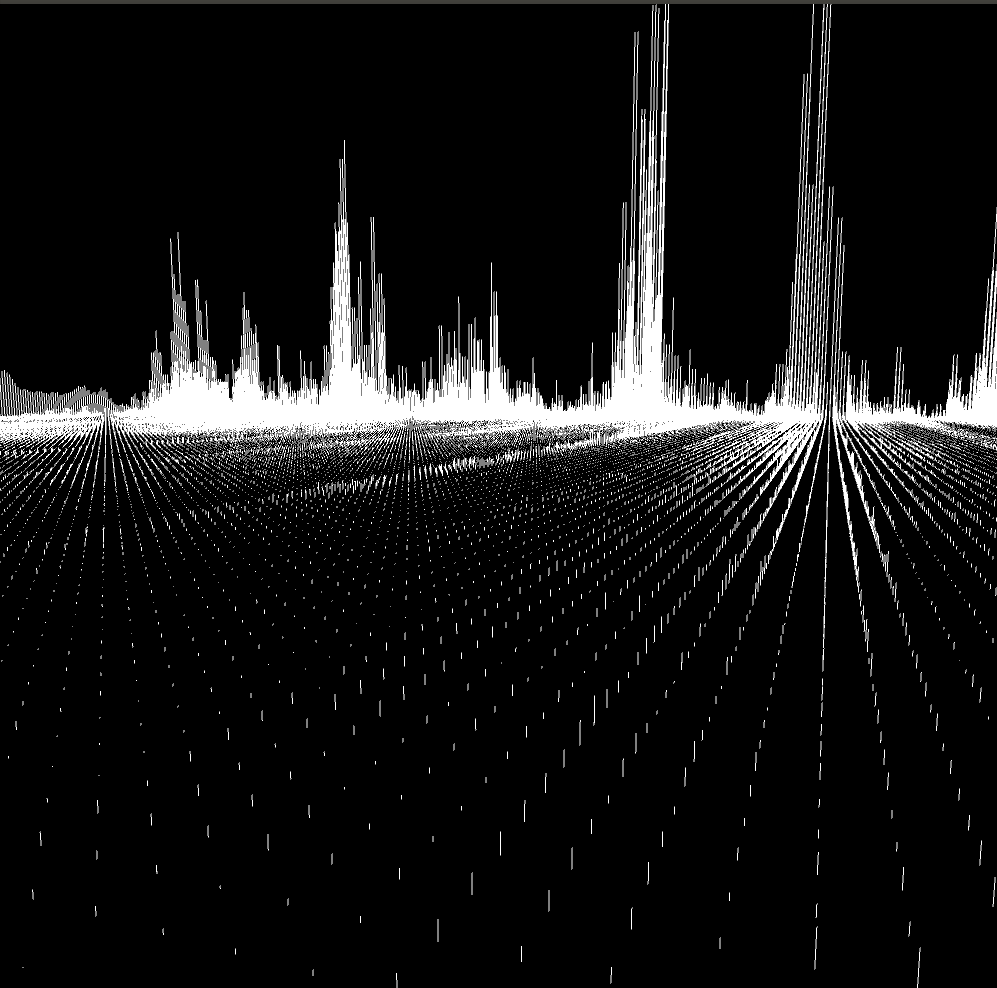

another visualisation of this sound data was made to create a 'soundscape' in three dimensions....

- each row represents one data sample in time and the height is determined by the numerical values at a particular frequency
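Turning the spectrum table into a 3D soundscape amounts to reading it as a height field. A minimal sketch, assuming the table is a list of rows (one per time sample) of numbers (one per frequency); `soundscape_vertices` and `grid_quads` are hypothetical names. Height is put on y here; Processing's y axis points down, so the sign would need flipping there.

```python
def soundscape_vertices(rows, x_step=1.0, z_step=1.0, height_scale=1.0):
    """Turn spectrum rows into a grid of 3D points:
    x = frequency bin, z = time sample (row index), y = height from the value."""
    return [(b * x_step, value * height_scale, t * z_step)
            for t, row in enumerate(rows)
            for b, value in enumerate(row)]


def grid_quads(n_rows, n_bins):
    """Vertex-index quads joining neighbouring samples, matching the
    row-major order produced by soundscape_vertices."""
    quads = []
    for t in range(n_rows - 1):
        for b in range(n_bins - 1):
            i = t * n_bins + b
            quads.append((i, i + 1, i + n_bins + 1, i + n_bins))
    return quads
```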

../quantified_data/soundScape1.png

../quantified_data/soundScape/

below is a quick prototype of what the effect of 'projecting' the sound onto a mesh could be (in this case, it was made just using a randomised data set). the nodes of the mesh are transformed in the 'x-direction' only by the data over time. the translations are not permanent and the mesh distortions are always based on the 'base' mesh.
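The non-permanent, x-only transformation can be sketched by rebuilding every frame from the untouched base mesh. How data values are assigned to nodes is not described above, so the sketch assumes vertex `i` takes value `i mod len(row)`; all names are hypothetical.

```python
import random


def random_frames(n_frames, n_values, seed=0):
    """A randomised stand-in data set: one row of values per frame."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_values)]
            for _ in range(n_frames)]


def displace_x(base, values, scale=1.0):
    """Offset every vertex along x only. Assumption: vertex i uses
    values[i % len(values)]. A new list is returned; base is untouched."""
    return [(x + scale * values[i % len(values)], y, z)
            for i, (x, y, z) in enumerate(base)]


def animate(base, frames, scale=1.0):
    # every frame starts again from the base mesh, so nothing accumulates
    return [displace_x(base, row, scale) for row in frames]
```

Making the distortion permanent (one of the future developments listed below) would mean feeding each frame's output back in as the next frame's base.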

../quantified_data/animation.gif

//////STEP DATA FROM AUDIO RECORDINGS////

...a prototype...

../quantified_data/walk-0.mp3

we have collected data of a walk using sound recording. this recording, which lasts approx. 12 mins, describes a journey from zinneke to the WTC as phil tries to catch up with one of the walks on sunday. the audio is recorded with a mobile phone, held in his hand.

the audio represents a kind of contextualised data set, which includes background noise of cars, dogs, people... but also identifiable from the audio is each step phil takes as he walks along the street. this 'step data' is analogous to the pedometer data that is recorded by devices such as fitbit.

we have used the audio file as a data set of the activity of walking and we have built a tool to visualize this. the tool is built in processing and 'projects' the audio onto a humanoid mesh that has been exported from make human.

the audio file is analysed in 'real time', i.e. with no pre- or post-processing, and has three distinct effects that distort the mesh

- low frequencies cause Perlin noise to fluidly deform the mesh

- high-frequency noises cause the mesh to be temporarily rendered as broken linework

AND

- the step data causes the mesh to be 'cracked'

currently, all of the effects act continuously and do not permanently modify the mesh
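Routing the analysed sound to the three effects can be sketched per frame, assuming one spectrum row per frame. The band boundaries, thresholds and names (`band_levels`, `active_effects`) are hypothetical placeholders, not the values or code of the Processing tool.

```python
def band_levels(row, low_bins, high_bins, step_bin):
    """Average one spectrum row over a low band and a high band
    ((start, stop) bin ranges), and read the single bin used for steps."""
    def mean(start, stop):
        part = row[start:stop]
        return sum(part) / len(part) if part else 0.0
    return {"low": mean(*low_bins), "high": mean(*high_bins), "step": row[step_bin]}


def active_effects(levels, high_threshold, step_threshold):
    """Decide how the three effects act on this frame."""
    return {
        "noise_amount": levels["low"],                     # continuous noise deformation strength
        "broken_lines": levels["high"] > high_threshold,   # temporary broken linework
        "crack": levels["step"] > step_threshold,          # a step cracks the mesh
    }
```

Because nothing is stored between frames, the effects stay continuous and non-permanent, as described above.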

to use the code, you can choose any 3D model file (in ASCII STL format) and any mp3 file and place them in the following folders

.../data/models

.../data/audio
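For reference, reading an ASCII STL file only needs its `vertex x y z` lines: each facet lists exactly three, between `outer loop` and `endloop`. A minimal Python sketch of that parsing; `read_ascii_stl` and `unique_vertices` are hypothetical helpers, not part of the project's code, and facet normals are ignored.

```python
def read_ascii_stl(text):
    """Return a list of triangles, each a tuple of three (x, y, z) vertices,
    from the text of an ASCII STL file. Normals are ignored."""
    triangles, current = [], []
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0] == "vertex":
            current.append(tuple(float(p) for p in parts[1:4]))
            if len(current) == 3:
                triangles.append(tuple(current))
                current = []
    return triangles


def unique_vertices(triangles):
    """Vertices in first-seen order, without duplicates (shared corners)."""
    return list(dict.fromkeys(v for tri in triangles for v in tri))
```

Usage, for a hypothetical model file: `tris = read_ascii_stl(open(path).read())`. Binary STL files (the other common variant) would need a different reader.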

the code will run without any further interaction, but you can spin the model around and control the audio channels and thresholds for the behaviour

there is also a version that works with the microphone of your computer, in which the character will react, in real time, to the external audio conditions

- you have to choose this option before you run the code

../quantified_data/audioMesh.gif

calculation and calibration

we used an audio analysis to calculate the number of steps in the walk. you can see in the animation that bar 16 is highlighted: this is the frequency range that the step data was taken from. the red line represents the threshold at which a step is registered. these values can be changed manually in the software using the keyboard controls described in the top left.
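Counting steps against a threshold can be sketched as counting rising crossings: a step registers when the band level goes from below to above the red line. A minimal sketch, assuming one level per frame for the chosen band; the `min_gap` refractory period (frames to wait before another step can register) is our own assumption, not something described above.

```python
def count_steps(levels, threshold, min_gap=1):
    """Count rising crossings of `threshold` in per-frame band levels.
    After a registered step, `min_gap` frames must pass before another
    crossing can register (assumed debounce, hypothetical parameter)."""
    steps, above, last = 0, False, None
    for i, level in enumerate(levels):
        if level > threshold and not above:
            if last is None or i - last >= min_gap:
                steps += 1
                last = i
        above = level > threshold
    return steps
```

Raising the threshold or the gap makes the count miss quieter steps; lowering them lets traffic and voices register as steps, which is the trade-off tuned by hand with the keyboard controls.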

//////

we 'calibrated' the counting based on a pedometer estimate....

- the pedometer predicted approx. 2050 steps

- the audio described 1850 steps
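Taking the pedometer as the reference purely for the arithmetic, the gap between the two counts works out as:

$$
\frac{1850}{2050} \approx 0.902, \qquad
\frac{2050 - 1850}{2050} = \frac{200}{2050} \approx 9.8\,\%, \qquad
\frac{2050}{1850} \approx 1.108
$$

so the audio count is roughly 10% below the pedometer estimate, and scaling audio counts by about 1.108 would align the two for this one walk only; a single walk is not enough to treat that factor as a general calibration.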

/////

the 'interference' of the contextual noise contributes to some of the steps being missed in the counting, but we can also assume that a pedometer is not accurate either, as it records only its own motion and then translates this into your body's motion

operation

the deformations of the mesh are not random. the effects used are relative to the sound input (according to frequency) and the position in space of any given point in the mesh. therefore, each mesh and sound file would produce a unique outcome.
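A deformation that depends only on the sound and on each point's position always gives the same mesh for the same inputs. A minimal sketch of such a deterministic displacement; a sum of sines stands in for the Perlin noise used in the tool, and pushing points along their direction from the origin is an assumption, as are the names `deform` and `frequency`.

```python
import math


def deform(vertex, band_amplitudes, frequency=3.0):
    """Displace one vertex along its direction from the origin.
    The offset depends only on the vertex position and the band amplitudes
    (no randomness), so the same mesh and sound give the same result.
    A sum of sines stands in here for Perlin noise (assumption)."""
    x, y, z = vertex
    offset = 0.0
    for k, amp in enumerate(band_amplitudes, start=1):
        offset += amp * math.sin(frequency * k * (x + 2 * y + 3 * z))
    length = math.sqrt(x * x + y * y + z * z) or 1.0  # avoid dividing by zero at the origin
    s = offset / length
    return (x + x * s, y + y * s, z + z * s)
```

Applied to every vertex of a mesh with each frame's band amplitudes, two different meshes (or two different recordings) diverge, while replaying the same pair reproduces the same sequence exactly.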

future developments

- permanent or cumulative deformations of the mesh?

- using the software as an alternative tool to design character meshes?

- use the character inside the soundscape 3D space?

- connect the data to an existing social media platform, such as soundcloud?

>>> print d.keywords(top=10)

[(0.049019607843137344, u'data'), (0.031862745098039276, u'fitbit'), (0.024509803921568672, u'software'), (0.017156862745098072, u'human'), (0.017156862745098072, u'interface'), (0.014705882352941204, u'create'), (0.014705882352941204, u'minutes'), (0.014705882352941204, u'using'), (0.012254901960784336, u'alternative'), (0.012254901960784336, u'city')]
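The output above comes from a keyword extraction over this pad's text, returning (weight, word) pairs. A rough standard-library approximation of that shape of output: relative word frequency with a small stopword list. This is not the implementation of the library used above; the stopword list and the name `keywords` are assumptions.

```python
import re
from collections import Counter

# small hypothetical stopword list; a real one would be much longer
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "that", "it",
             "as", "on", "for", "with", "we", "be", "are", "this", "or", "by"}


def keywords(text, top=10):
    """Return the `top` most frequent non-stopword words as (weight, word)
    pairs, where weight = count / number of non-stopword words."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    if not words:
        return []
    counts = Counter(words)
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(n / len(words), w) for w, n in ranked[:top]]
```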

http://www.wired.com/2013/08/parametric-expression-animation-makehuman-mike-pelletier/